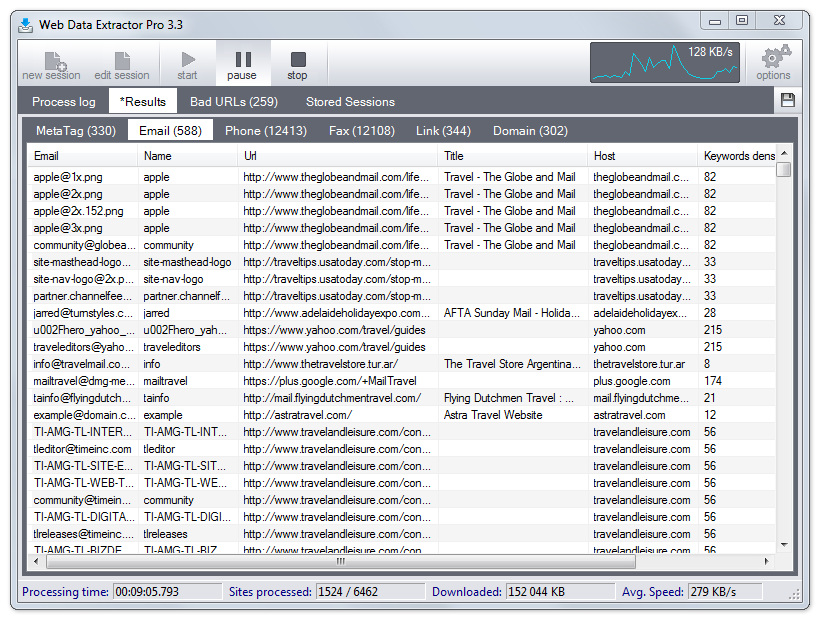

WebSites: Enter a website URL and extract all data found in that site.

Select the 2nd option "WebSite/Dir/Groups" - enter the website URL - select the Depth - click OK.

What WDE Does: WDE will retrieve the html/text pages of the website according to the Depth you specified and extract all data found in those pages.

By default, WDE will stay within the current domain only. If you want WDE to retrieve files of external sites that are linked from the starting site specified in the "General" tab, then you need to set "Follow External URLs" in the "External Site" tab. In this case, by default, WDE will follow external sites only once, that is: (1) WDE will process the starting address and (2) all external sites found in the starting address. It will not follow the external sites found in (2), and so on.

WDE is powerful: if you want WDE to follow external sites in an unlimited loop, select "Unlimited" in the "Spider External URLs Loop" combo box. Remember that you then need to stop the WDE session manually, because this way WDE can travel the entire internet.

Another option lets you tell WDE to always process the base URLs of external sites. For example, if an external site is found through a link that points into one of its subdirectories, WDE will grab only the site's base URL. It will not visit that subdirectory unless you set a depth that also covers it.

You can also set WDE to ignore URL case, which avoids duplicate URLs that differ only in letter case. When you set WDE to ignore URL case, it converts all URLs to lowercase and can remove such duplicates. However, some servers are case-sensitive, and you should not use this option on those sites.

You can also choose Yahoo, Google or another directory and get all data from there. Let's say you want to extract data of all companies listed at a directory URL: select the 2nd option "WebSite/Dir/Groups" - enter this URL in the "Starting Address" box - select "Process First Page Only". Or, let's say you want to extract data of all companies listed across the pages under a directory URL: select the 2nd option "WebSite/Dir/Groups" - enter the URL in the "Starting Address" box - select Depth=0 and the "Stay within Full URL" option.
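WDE's own code is not part of this guide, so the following is only a minimal Python sketch of how the external-site options described above could behave. It is a hypothetical model: the in-memory `site` dict stands in for downloading and parsing pages, and `crawl`, `base_url`, `external_loops`, `base_only` and `ignore_case` are illustrative names, not WDE settings or APIs. An `external_loops` value of 0 keeps the crawl on the starting host, 1 also visits external sites linked from the starting site (but not sites linked from those), and `None` plays the role of "Unlimited".

```python
from collections import deque
from urllib.parse import urlsplit


def base_url(url: str) -> str:
    """Reduce a URL to scheme://host/ (hypothetical 'process base URLs' behaviour)."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}/"


def crawl(start, links, external_loops=0, base_only=False, ignore_case=False):
    """Return the pages visited, in breadth-first order.

    links          -- dict: page URL -> list of URLs linked from that page
                      (a stand-in for downloading and parsing real pages)
    external_loops -- 0: never leave the starting host (the default)
                      1: also visit external sites linked from the start site
                      None: follow external sites without limit
    base_only      -- when jumping to an external site, keep only its base URL
    ignore_case    -- lowercase every URL so case variants are deduplicated
    """
    norm = (lambda u: u.lower()) if ignore_case else (lambda u: u)
    start = norm(start)
    seen = {start}
    queue = deque([(start, 0)])  # (url, number of external hops so far)
    visited = []
    while queue:
        url, hops = queue.popleft()
        visited.append(url)
        host = urlsplit(url).netloc
        for link in links.get(url, []):
            link = norm(link)
            next_hops = hops
            if urlsplit(link).netloc != host:
                next_hops += 1
                if external_loops is not None and next_hops > external_loops:
                    continue  # external site beyond the allowed loop count
                if base_only:
                    link = base_url(link)
            if link not in seen:
                seen.add(link)
                queue.append((link, next_hops))
    return visited


site = {  # hypothetical link graph
    "http://a.example/": ["http://a.example/About.htm", "http://a.example/about.htm",
                          "http://b.example/x/y.htm"],
    "http://b.example/": ["http://c.example/"],
}
print(crawl("http://a.example/", site))
# ['http://a.example/', 'http://a.example/About.htm', 'http://a.example/about.htm']
print(crawl("http://a.example/", site, external_loops=1, base_only=True, ignore_case=True))
# ['http://a.example/', 'http://a.example/about.htm', 'http://b.example/']
```

The second call shows the trade-off of ignoring case: `About.htm` and `about.htm` are merged into one URL, which is only safe when the server itself is not case-sensitive.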

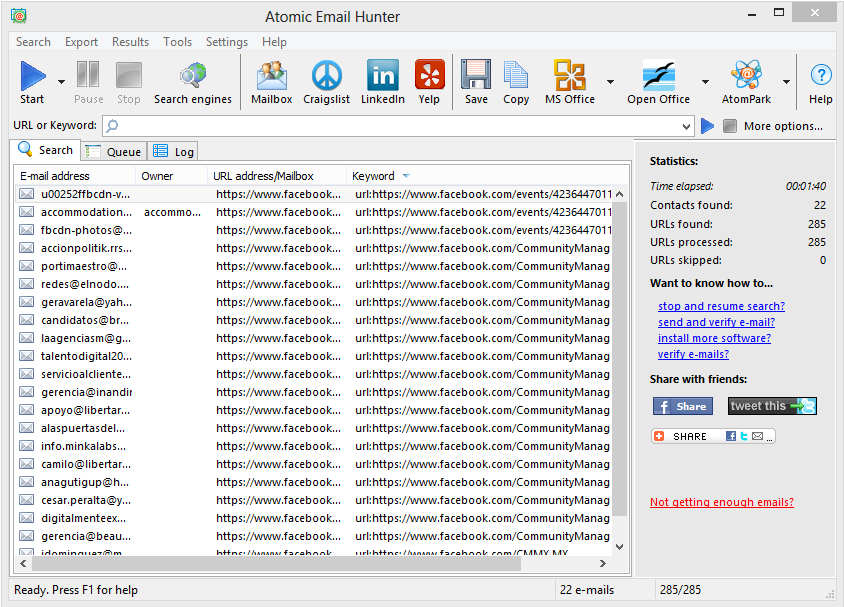

WDE supports operation through a proxy server and works very fast, as it is able to load several pages simultaneously, and it requires very few resources. It is a powerful, highly targeted email spider and harvester. You can set up different types of extraction with this unique spider and link extractor:

WDE spiders 18+ search engines for the right websites and gets data from them.

Select the "Search Engines" source - enter a keyword - click OK.

What WDE Does: WDE will query 18+ popular search engines, extract all matching URLs from the search results, remove duplicate URLs and finally visit those websites and extract data from them.

You can tell WDE how many search engines to use: click the "Engines" button and uncheck the listings you do not want to use. You can add other engine sources as well.

WDE sends queries to search engines to get matching website URLs. Next, it visits those matching websites for data extraction. How deep it spiders into the matching websites depends on the "Depth" setting of the "External Site" tab.

Here you need to tell WDE how many levels to dig down within the specified website. If you want WDE to stay within the first page, just select "Process First Page Only". A setting of "0" will process and look for data in the whole website. A setting of "1" will process the index or home page with associated files under the root dir only.

For example, suppose WDE is going to visit a URL for data extraction. You need to decide how deep you want WDE to look for data; WDE is a powerful, fully featured and unique spider! To process only the matching URL page of the search (URL #6), select "Depth=0" and check "Stay within Full URL".

Each website is structured differently on the server. Some websites may have only a few files and some may have thousands of files. So sometimes you may prefer to use the "Stop Site on First Email Found" option. For example: you set WDE to go through the entire site and WDE finds an email in URL #2 (support.htm). If you tell WDE to "Stop Site on First Email Found", then it will not go on to the other pages (#3-12).
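The depth, "Stay within Full URL", "Process First Page Only" and "Stop Site on First Email Found" settings above can be modelled with a short Python sketch. This is a hypothetical model, not WDE's implementation: it assumes a depth of N means "at most N directory levels" (so 1 is the root directory only and 0 is unlimited), that "Stay within Full URL" means a URL must start with the starting address, and it replaces HTTP fetching with an in-memory `pages` dict. `dir_level`, `in_scope` and `scan_site` are illustrative names.

```python
import re
from collections import deque
from urllib.parse import urlsplit

# A deliberately simple email pattern; real address syntax is looser.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def dir_level(url):
    """Directory level of a URL: '/', '/support.htm' -> 1; '/products/item/' -> 3."""
    path = urlsplit(url).path
    return max(1, path[: path.rfind("/") + 1].count("/"))


def in_scope(url, start, depth, stay_within_full_url):
    """Depth 0 = whole site; depth N = at most N directory levels (assumed meaning)."""
    if urlsplit(url).netloc != urlsplit(start).netloc:
        return False  # stay on the starting site
    if depth and dir_level(url) > depth:
        return False
    if stay_within_full_url and not url.startswith(start):
        return False
    return True


def scan_site(start, pages, depth=0, stay_within_full_url=False,
              first_page_only=False, stop_on_first_email=False):
    """Collect emails from one site; returns (visited URLs, emails found).

    pages -- dict: URL -> (page text, list of linked URLs), standing in for HTTP fetches
    """
    queue, seen = deque([start]), {start}
    visited, emails = [], []
    while queue:
        url = queue.popleft()
        text, links = pages.get(url, ("", []))
        visited.append(url)
        for email in EMAIL_RE.findall(text):
            if email not in emails:
                emails.append(email)
        if first_page_only or (stop_on_first_email and emails):
            break  # do not go on to the site's other pages
        for link in links:
            if link not in seen and in_scope(link, start, depth, stay_within_full_url):
                seen.add(link)
                queue.append(link)
    return visited, emails


pages = {  # hypothetical site
    "http://example.com/": ("Welcome", ["http://example.com/support.htm",
                                        "http://example.com/products/",
                                        "http://example.com/products/item/"]),
    "http://example.com/support.htm": ("Write to support@example.com",
                                       ["http://example.com/contact.htm"]),
    "http://example.com/contact.htm": ("Sales: sales@example.com", []),
    "http://example.com/products/": ("Catalogue", ["http://example.com/products/item/",
                                                   "http://example.com/"]),
    "http://example.com/products/item/": ("Item page", []),
}
print(scan_site("http://example.com/", pages, stop_on_first_email=True))
# (['http://example.com/', 'http://example.com/support.htm'], ['support@example.com'])
```

As in the support.htm example above, stopping on the first email leaves the remaining pages of the site unvisited, which saves time on large sites at the cost of missing any other addresses there.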
The WDE Email Extractor module is designed to extract highly targeted email addresses from web pages, search results, web dirs/groups and lists of URLs from a local file, for personalized B2B contact and communication. It is an industrial-strength, fast and reliable way to collect email addresses from the Web. It has various limiters of the scanning range (URL filter, page text filter, email filter, domain filter), with which you can extract only the addresses you actually need from web pages instead of all the addresses present there; as a result, you create your own custom and targeted bulk email list. It has an option to save extracted emails directly to a disk file, so there is no limit on the number of emails extracted per session.
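As a rough illustration of the four limiters and the save-to-disk option, here is a minimal Python sketch. The substring-based filter semantics, the simple email regex, and the `harvest` function and its parameter names are assumptions made for illustration; they are not WDE's documented behaviour. Each accepted address is appended to the output file as soon as it is found instead of being collected in a result list, although the set used for de-duplication still grows with the number of unique addresses.

```python
import os
import re
import tempfile

# Deliberately simple pattern; it will miss some valid but unusual addresses.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def harvest(pages, out_path, url_filter=None, text_filter=None,
            email_filter=None, domain_filter=None):
    """Extract filtered, de-duplicated emails and append them to out_path.

    pages         -- iterable of (url, page_text) pairs, e.g. from a crawler
    url_filter    -- only scan pages whose URL contains this substring
    text_filter   -- only scan pages whose text contains this word (case-insensitive)
    email_filter  -- only keep addresses containing this substring
    domain_filter -- only keep addresses at this domain or its subdomains
    Returns the number of addresses written.
    """
    seen = set()
    written = 0
    with open(out_path, "a", encoding="utf-8") as out:
        for url, text in pages:
            if url_filter and url_filter not in url:
                continue
            if text_filter and text_filter.lower() not in text.lower():
                continue
            for email in EMAIL_RE.findall(text):
                email = email.lower()
                domain = email.rsplit("@", 1)[1]
                if email in seen:
                    continue
                if email_filter and email_filter not in email:
                    continue
                if domain_filter and not (domain == domain_filter
                                          or domain.endswith("." + domain_filter)):
                    continue
                seen.add(email)
                out.write(email + "\n")  # saved immediately, not kept in a list
                written += 1
    return written


if __name__ == "__main__":
    pages = [("http://example.com/contact.htm", "Sales: sales@example.com, jobs@example.org")]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "emails.txt")
        print(harvest(pages, path, url_filter="contact", domain_filter="example.com"))  # 1
```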
